The usual setup (documented by Microsoft) of version control for F&O is using Team Foundation Version Control and configuring the workspace mapping like this:

| Source control folder | Local folder |
| --- | --- |
| $/MyProject/Trunk/Main/Metadata | k:\AosService\PackagesLocalDirectory |
| $/MyProject/Trunk/Main/Projects | c:\Users\Admin123456789\Documents\Visual Studio 2019\Projects |

This means that PackagesLocalDirectory contains both standard packages (such as ApplicationSuite) and custom packages, i.e. your own development and any ISV solutions you’ve installed.

This works fine if you always want to work with just a single version of your application, but the need to switch to a different branch or a different version is quite common. For example:

- You merged code to a test branch and want to compile it and test it.

- You found a bug in production or a test environment and you want to debug code of that version, or even make a fix.

- As a VAR, you want to develop code for several clients on the same DEV box.

It’s possible to change the workspace to use a different version of code, but it’s a lot of work. For example, let’s say you want to switch from Dev branch to Test to debug something. You have to:

- Suspend pending changes

- Delete workspace mapping

- Ideally, delete all custom files from PackagesLocalDirectory (otherwise you have to later deal with extra files that exist in Dev branch but not Test)

- Create a new workspace mapping

- Get latest code

- Compile custom packages (because source control contains just source code, not runnable binaries)

When you’re done and want to continue with the Dev branch, you need to repeat all the steps again to switch back.

This is very inflexible, time-consuming, and it doesn’t allow you to have multiple sets of pending changes. Therefore I use a different approach – I utilize multiple workspaces and switch between them.

I never put any custom packages into PackagesLocalDirectory. Instead, I create a separate folder for each workspace, e.g. k:\Repo\Dev. PackagesLocalDirectory then contains only code from Microsoft, k:\Repo\Dev contains code from the Dev branch, k:\Repo\Test contains code from the Test branch, and so on.

Each workspace has not just its version of the application, but also a list of pending changes. It also contains binaries – I need to compile code once when creating the workspace, but not again when switching between workspaces.

F&O doesn’t always have to use PackagesLocalDirectory, and Visual Studio doesn’t have to keep projects in the default location (such as Documents\Visual Studio 2019\Projects). The paths can be changed in configuration files. Therefore if I want to use, say, the Test workspace, I tell F&O to take packages from k:\Repo\Test\Metadata and Visual Studio to use k:\Repo\Test\Projects for projects. Doing it manually would be time-consuming and error-prone, so I do it with a script (see more about the scripts at the end).
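To illustrate the kind of edit such a script performs, here is a minimal Python sketch that rewrites path values in a .NET-style configuration file. It is not the implementation from my scripts (those are PowerShell); the function name `set_app_settings` is made up for this example, and it assumes the paths live in `<add key="..." value="..."/>` entries under `<appSettings>`. Check your own configuration files for the actual key names before relying on it.

```python
import xml.etree.ElementTree as ET


def set_app_settings(config_path, new_values):
    """Update <add key="..." value="..."/> entries under <appSettings>.

    Only keys that already exist in the file are changed. The names of the
    updated keys are returned so the caller can verify nothing was missed.
    """
    tree = ET.parse(config_path)
    updated = []
    # Look only at entries directly under <appSettings> of the root element.
    for entry in tree.getroot().findall("appSettings/add"):
        key = entry.get("key")
        if key in new_values:
            entry.set("value", new_values[key])
            updated.append(key)
    tree.write(config_path, encoding="utf-8", xml_declaration=True)
    return updated
```

A call could look like `set_app_settings(config_file, {"Aos.MetadataDirectory": r"k:\Repo\Test\Metadata"})`, where both the file and the key name are assumptions to verify on your own environment. Note that ElementTree drops XML comments when it rewrites a file, so keep a backup of the original.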

If my workspace folder contains just custom packages, I can use it for things like code merge, but I can’t run the application or even open Application Explorer, because custom code depends on standard code, which isn’t there. I could copy standard packages to each workspace folder, but I like the separation. Copying would also require a lot of time and disk space, and I would have to update each folder after updating the standard application.

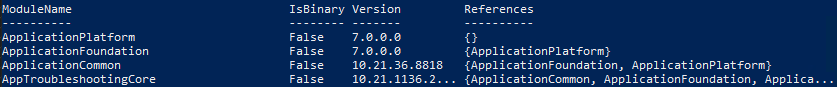

For example, I could take k:\AosService\PackagesLocalDirectory\ApplicationPlatform and copy it to k:\Repo\Dev\Metadata\ApplicationPlatform. But I can achieve the same goal by creating a symbolic link to the folder in PackagesLocalDirectory. Of course, no one wants to add 150 symbolic links manually – a simple script can iterate the folders and create a symbolic link for each of them.
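The idea can be sketched in a few lines of Python. This is only an illustration, not the PowerShell script I actually use; the function name `link_standard_packages` is invented for the example, and it assumes that every folder in the standard directory should be linked and that anything already present in the workspace must be left alone.

```python
import os
from pathlib import Path


def link_standard_packages(standard_dir, workspace_metadata_dir):
    """Create a symbolic link in the workspace for each standard package folder.

    Folders that already exist in the workspace (custom packages or links
    created earlier) are left untouched. Returns the names of created links.
    """
    standard = Path(standard_dir)
    workspace = Path(workspace_metadata_dir)
    created = []
    for package in sorted(standard.iterdir()):
        if not package.is_dir():
            continue  # skip loose files; only folders are linked
        link = workspace / package.name
        if link.exists() or link.is_symlink():
            continue  # a custom package or an existing link has this name
        os.symlink(package, link, target_is_directory=True)
        created.append(package.name)
    return created
```

With the paths from this article, the call would be `link_standard_packages(r"k:\AosService\PackagesLocalDirectory", r"k:\Repo\Dev\Metadata")`. On Windows, creating symbolic links normally requires an elevated prompt. Because existing names are skipped, the function can be run again after a new standard package appears.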

A few more remarks

- We’re usually interested in the single workspace used by F&O, but note that some actions can be done without switching workspaces. For example, we can use Get Latest to download the latest code from source control (it’s even better if the folder isn’t used by F&O/VS, because no files are locked), merge code and commit changes, or even build the application from the command line.

- If you use Git, branch switching is easier, but you’ll still likely want to keep standard and custom packages in separate folders.

- Before applying an application update from Microsoft, it’s better to tell F&O to use PackagesLocalDirectory. If you don’t and the update brings a new package, it’ll be created in the active workspace and other workspaces won’t see it. You’d have to identify the problem and move the new package to PackagesLocalDirectory.

- If a new package is added, you’ll also need to regenerate symbolic links for your workspaces.

- You can have multiple workspaces for a single branch. For example, I use a separate workspace for code reviews, so I don’t have to suspend the development I’m working on.

Scripts

The scripts are available on GitHub: github.com/goshoom/d365fo-workspace. Use them as a base or inspiration for your own scripts; don’t expect them to cover all possible ways of working.

When you create a new workspace folder, open it in PowerShell (with elevated permissions) and run Add-FOPackageSymLinks. Then you can tell F&O to use this folder by running Switch-FOWorkspace. If you want to see which folder is currently in use, call Get-FOWorkspace.

You can also see documentation comments, and the actual implementation, inside D365FOWorkspace.psm1.