Last time, I mentioned using GitHub Copilot for X++ development, but I didn't realize that not everyone knows GitHub Copilot can be used in Visual Studio. Many examples on the Internet show it in Visual Studio Code and don't mention Visual Studio at all, which may be confusing.

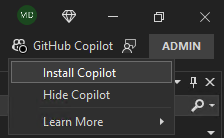

To use GitHub Copilot in Visual Studio, you need Visual Studio 2022, ideally version 17.10 or later, where GitHub Copilot is integrated as one of the optional components of Visual Studio. Because it's optional, you may need to run the VS installer and add the component. To make it easier for you, VS offers a button to start the installation.

You can also add GitHub Copilot to older versions of VS 2022 as an extension, but simply using a recent version of VS makes more sense to me. If needed, you can learn more in Install GitHub Copilot in Visual Studio.

You'll also need a GitHub Copilot subscription (a one-month trial is available), and you must be signed in to GitHub in Visual Studio.

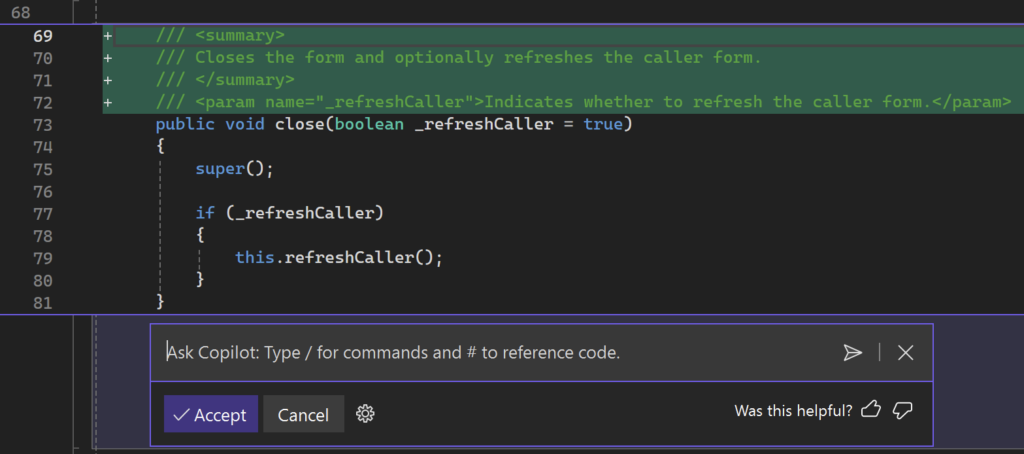

Then you'll start getting code completion suggestions, and you can use the Alt+/ shortcut in the code editor to interact with Copilot, chat with Copilot, and so on.