I recently ran into a problem with the Recurring Integrations Scheduler, and I doubt I’ll be the only one, so I’m going to explain it here.

The Recurring Integrations Scheduler (RIS) supports both the old recurring integrations API (enqueue/dequeue) and the new package API, which is the recommended approach. With the package API, you can either import whole packages (which you must prepare yourself) or give RIS the actual data files (XML, CSV…) and let it build the packages for you. That’s the scenario I’m talking about below.

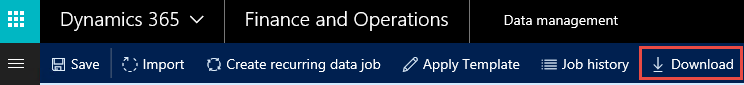

If you want RIS to create packages, you must give it a package template. Go to the Data Management workspace in AX 7, create an import job, configure it as you like and then download the package. I’m using the Customer groups entity as an example.

You’ll get a file with a GUID as the name, such as {99DD43E4-936E-4402-80E6-1477013A5275}.zip. Feel free to rename it; I’ll call it CustGroupImportTemplate.zip.

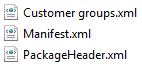

This is its content:

You can see one data file for the entity (Customer groups.xml in my case) plus some metadata defining the import project.

It’s not exactly what’s needed, as we’ll see later, but let’s continue for now with this package.

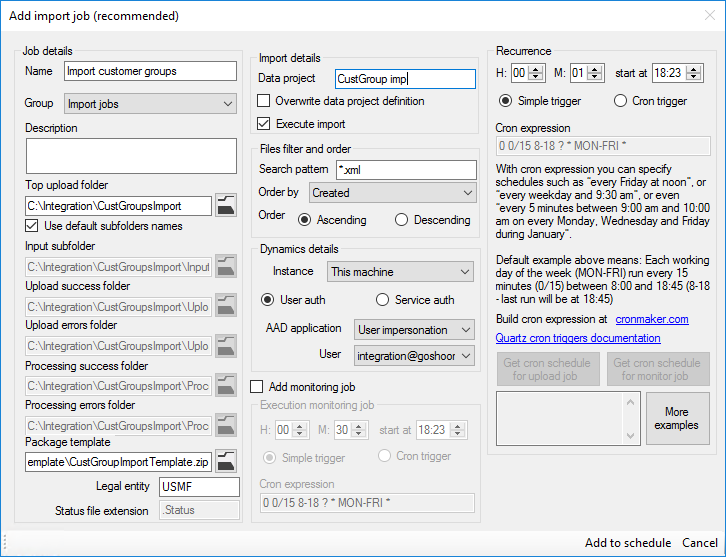

Go to RIS and configure an import job. Don’t forget to set Package template to the package file.

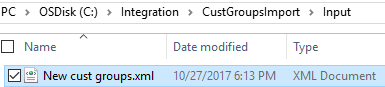

Make sure the job is running, create an input file in the right format and put it into the input folder.

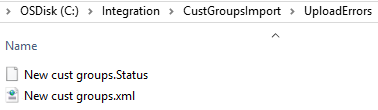

Unfortunately the import fails and you’ll find these files in the Processing errors folder:

The status file merely says that StatusCode = BadRequest, and the event logs for RIS (Applications and Services Logs > Recurring Integrations Scheduler) don’t reveal anything more. More useful event logs are under Applications and Services Logs > Microsoft > Dynamics > AX-OData Service, where you can find something like this:

System.InvalidOperationException: Exception occurred while executing action ImportFromPackage on Entity DataManagementDefinitionGroup: The file ‘C:\windows\TEMP\005DE7CC-C7E8-410D-8690-7A63C30224BF_FF5EE0BE-205C-48BC-8987-96C007CE74ED\Customer groups.xml’ already exists.

A unique folder name is generated for each import, so the problem isn’t caused by files left over in the Temp folder.

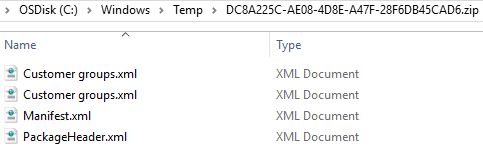

In short, the problem is in the package file itself, which you unfortunately never see, because it’s automatically deleted on failure. If you captured it and looked inside, you would find this:

There are literally two files with the same name and therefore the attempt to unpack the archive fails on the second instance.

How does this happen?

One file comes from the package template. The other is the input file, which RIS renamed to match the entity name and added to the archive. The ZIP format allows several entries with the same name, but the library used by RIS for unpacking isn’t able to handle it.
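To see the collision outside RIS, here is a minimal Python sketch. It is not RIS code; the function names are made up for illustration, and the metadata file names are just placeholders for whatever your template contains. It builds an archive the way described above (template entries plus an appended data file) and then reports entry names that occur more than once.

```python
import io
import warnings
import zipfile
from collections import Counter


def build_package_like_ris(template_entries, data_file_name, data):
    """Imitate the behaviour described above (hypothetical helper):
    keep every template entry and append the input file under the entity name."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for name, content in template_entries.items():
            archive.writestr(name, content)
        with warnings.catch_warnings():
            # Python warns about a duplicate name but still writes the entry.
            warnings.simplefilter("ignore", UserWarning)
            archive.writestr(data_file_name, data)
    return buffer.getvalue()


def duplicate_entry_names(package_bytes):
    """Return the entry names that occur more than once in a ZIP archive."""
    with zipfile.ZipFile(io.BytesIO(package_bytes)) as archive:
        counts = Counter(info.filename for info in archive.infolist())
    return sorted(name for name, count in counts.items() if count > 1)


if __name__ == "__main__":
    # Placeholder contents; a real template holds the project metadata.
    template = {
        "Manifest.xml": "<metadata/>",
        "PackageHeader.xml": "<header/>",
        "Customer groups.xml": "<data-from-template/>",
    }
    package = build_package_like_ris(template, "Customer groups.xml", "<new-data/>")
    print(duplicate_entry_names(package))
```

If you manage to capture a failing package, running `duplicate_entry_names` on its bytes is a quick way to confirm whether this is the problem you are facing.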

To get it working correctly, you must remove the data file (Customer groups.xml) from the package used as a template. A better solution, though, would be modifying RIS to replace the file instead of attaching another file with the same name.
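You can delete the file with any archive tool, but if you prepare templates often, a small script may be handy. The following is a rough sketch under my own assumptions: `strip_data_file` is a hypothetical helper, not part of RIS, and the file names are the ones from my example. It copies the template into a new archive and leaves out the data file.

```python
import zipfile


def strip_data_file(template_path, cleaned_path, data_file_name):
    """Copy a package template, leaving out the entity data file.

    Returns the names of the entries kept in the cleaned template.
    """
    kept = []
    with zipfile.ZipFile(template_path) as source, \
            zipfile.ZipFile(cleaned_path, "w", zipfile.ZIP_DEFLATED) as target:
        for info in source.infolist():
            if info.filename == data_file_name:
                continue  # skip the data file; RIS adds the real one later
            # Reuse the original entry metadata and copy the content as-is.
            target.writestr(info, source.read(info))
            kept.append(info.filename)
    return kept


if __name__ == "__main__":
    # Paths are examples; adjust them to where your template lives.
    remaining = strip_data_file(
        "CustGroupImportTemplate.zip",
        "CustGroupImportTemplate.clean.zip",
        "Customer groups.xml",
    )
    print(remaining)
```

Then point the Package template setting of the RIS job to the cleaned file. Writing to a new archive instead of modifying the original keeps the downloaded package intact in case you need it again.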

Thanks for this post. Has an updated version with a fix been released yet? As far as I can see, removing the file from the template doesn’t work either. I used a .csv file instead of .xml.

Either you didn’t apply the fix correctly, or you have an additional problem there.

I don’t know whether the code has been updated; simply look at the history on GitHub.

Hi Martin,

I really like your post and your answers on the Dynamics 365 Community, and I believe you can help me.

My customer needs to integrate D365FO with an on-premises system.

The requirements are

A. The integration should work synchronously, or in near real time.

B. The integration direction is from D365FO to an on-premises file system (creating a file) and to an on-premises Access DB (CRUD on Access table records).

Examples:

When a production work order is started in D365FO, a text file with production information should be created on the customer’s on-premises file system, to be picked up by the production robots.

When a production work order is reported as finished (RAF), table records in an on-premises Access DB need to be created or updated. This DB is used by an automatic warehouse system.

The only way I could think of is creating some kind of on-premises “integration scheduler” that runs every 10 seconds and gets updated information (from new data entities) for creating the production file or updating the Access DB tables. I really don’t like this solution.

I understand that there are other approaches for achieving this, using an application proxy or a data gateway, but I couldn’t find the best way or an example of how to implement it for my scenarios.

Can you please share your knowledge, or a simple example of how I can use an application proxy or a data gateway to create a file or CRUD records on an on-premises server, triggered from D365FO?

Thanks,

Gill

It’s not something that can be covered in full in a few sentences. If you need more details, start a discussion in a discussion forum.

If you want to create a file in a local system based on an event in D365FO, consider using business events as the trigger and utilize Flows or Logic Apps to create the file. The File System connector, together with a data gateway, will allow you to write the file on premises.

Or maybe you would like to bypass files completely, but I suspect you would have to write your own web service (running on-premises and pushing data to Access). I’m not an expert on Access, though.

Hi Martin,

I tried to import a customer group data file by following the instructions in https://github.com/Microsoft/Recurring-Integrations-Scheduler/wiki, but I ran into the error below.

“System.InvalidOperationException: Exception occurred while executing action ImportFromPackage on Entity DataManagementDefinitionGroup: Could not find file ‘C:\Users\Usera6a2555507d\AppData\Local\Temp\2\D9866373-FB13-4D42-9C9E-3780CB66F02D_5CDA7525-C2DA-4C5D-8E06-B9EDFB8DED8C\PackageHeader.xml’. at Microsoft.Dynamics.Platform.Integration.Services.OData.Action.ActionInvokable.Invoke() at Microsoft.Dynamics.Platform.Integration.Services.OData.Update.UpdateProcessor.ActionInvocation(ChangeOperationContext context, ActionInvokable action) at Microsoft.Dynamics.Platform.Integration.Services.OData.Update.UpdateManager.c__DisplayClass14_0.b__0(ChangeOperationContext context) at Microsoft.Dynamics.Platform.Integration.Services.OData.Update.ChangeInfo.ExecuteActionsInCompanyContext(IEnumerable`1 actionList, ChangeOperationContext operationContext) at Microsoft.Dynamics.Platform.Integration.Services.OData.Update.ChangeInfo.ExecuteActions(ChangeOperationContext context) at Microsoft.Dynamics.Platform.Integration.Services.OData.Update.UpdateManager.SaveChanges() at Microsoft.Dynamics.Platform.Integration.Services.OData.AxODataDelegatingHandler.d__3.MoveNext()”.

Can you please give me some suggestions?

Thanks